👨🏽💼 How tech-sector layoffs are hiding the biggest compute shift of the decade 🦾 Agentic Enterprise @ McKinsey — Event Recap 🤓 Enterprise AI Day DEC11

The Stealth Arrival of AI Inference

How tech-sector layoffs are hiding the biggest compute shift of the decade

The Layoff Alarm Bells

More than 100,000 jobs have been cut across major tech firms in 2025 — not because demand vanished, but because the work did.

Microsoft trimmed nearly 9,100 roles (≈ 4 % of its workforce) while committing more than $80 billion to AI infrastructure this year. (Reuters)

Amazon cut 14,000 corporate positions, calling AI “the most transformative technology since the internet.” (The Verge)

Even Meta’s Superintelligence Labs let go of ~600 engineers, not to scale back but to concentrate resources on fewer, “load-bearing” AI teams. (Business Insider)

“In S&P Global Market Intelligence surveys conducted in 2024 and early 2025, organizations broadly expressed expectations that AI would reshape existing work by boosting efficiency and redistributing tasks, rather than causing widespread job losses.” (S&P Global)

These are not cyclical layoffs. They are strategic reallocations — human labor giving way to model inference. Training may still make headlines, but serving those models at scale has become the real industrial revolution. We have seen such shifts in staffing in the past, and they often signal a significant event underway in the market.

The overlooked inflection point

Public attention still centers on model training, billion-dollar clusters and headline-grabbing releases. Behind the scenes, inference workloads (the act of running those models for billions of daily tasks) have quietly surpassed training in operational importance. Making models costs a lot of money. Using them generates revenue and enhances products and services. As a consequence, it also redefines job roles and workloads.

Every chat reply, code completion, or agentic decision burns inference compute. It’s the true economy of AI, expanding much faster than most realize. Tracking GPU shipments or cluster spending alone disguises the shift that has already begun: the growth of AI inference.

As Lisa Su, CEO of AMD, put it, “We always believed that inference would actually be the driver of AI going forward, and we can now see that inference inflection point. … we now expect that inference is going to grow more than 80% a year for the next few years, becoming the largest driver of AI compute…” (Fierce Electronics)

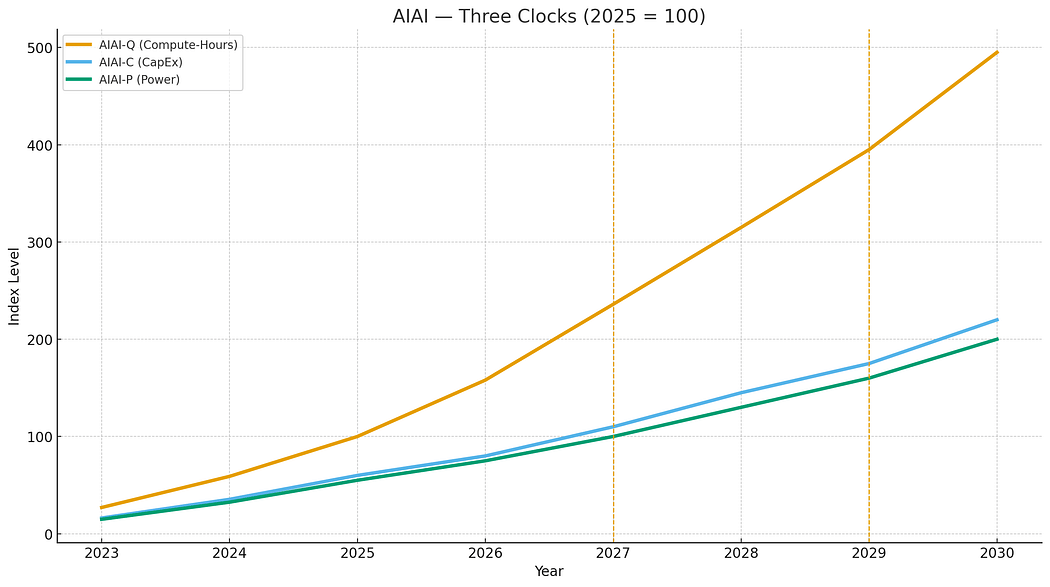

Measuring what’s really happening

At Pascaline Systems, we wanted a way to measure the true pulse of AI — not by revenue, not by chips shipped, but by usage. So we built the AI Inference Activity Index (AIAI), a metric that tracks compute-hours rather than dollars. We derived this index from global accelerator shipments, utilization ratios, and power data from Dell’Oro, Omdia, and the IEA. This index monitors the number of active accelerators and their average utilization. It captures how much compute time the world actually spends on AI, divided between training and inference. The results show how fast inference is taking over compute time.

| Year | Inference Share | Training Share |
| --- | --- | --- |
| 2023 | 25% | 75% |
| 2025 | 35% | 65% |
| 2027 | 55% | 45% |
| 2030 | 65% | 35% |

According to the AIAI index, inference overtakes training in compute-hours by 2027, roughly two years earlier than most syndicated forecasts predict. By 2030, inference represents nearly two-thirds of all AI compute time.
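The crossover timing can be sanity-checked against the share table. The sketch below is not the AIAI methodology; it simply assumes the inference share moves in a straight line between the published table points (an assumption made here for illustration) and solves for the year the share reaches 50%. The names `AIAI_INFERENCE_SHARE` and `crossover_year` are hypothetical.

```python
# Inference share of AI compute-hours (percent), copied from the AIAI table.
AIAI_INFERENCE_SHARE = {2023: 25.0, 2025: 35.0, 2027: 55.0, 2030: 65.0}


def crossover_year(shares, threshold=50.0):
    """Return the (fractional) year at which the share first reaches `threshold`.

    Assumes straight-line movement between consecutive table points,
    which is an illustrative simplification, not the index's own model.
    """
    points = sorted(shares.items())
    for (y0, s0), (y1, s1) in zip(points, points[1:]):
        if s0 < threshold <= s1:
            # Linear interpolation between the two bracketing years.
            return y0 + (threshold - s0) * (y1 - y0) / (s1 - s0)
    return None  # threshold is never crossed within the table


if __name__ == "__main__":
    print(crossover_year(AIAI_INFERENCE_SHARE))  # 2026.5
```

Under that linear assumption the 50% mark falls in mid-2026, which is consistent with the statement that inference overtakes training by 2027.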

Why earlier? Because other forecasts track spending, not usage. Training clusters cost more per GPU, so their financial footprint lingers even as runtime dominance shifts.

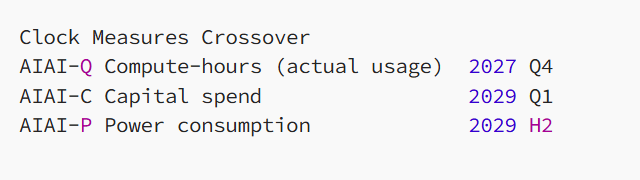

Three clocks, one story

To compare lenses, we translated the compute-hour curve into capital and power equivalents:

Usage leads. Spend follows. Power locks in last.

All three curves point in the same direction: the global compute economy is tilting toward inference. As AI models become more capable and more efficient, the industry will reach a point where money and effort shift from developing models to using them for effective work.

A market hiding in plain sight

The AIAI Index was not meant to predict markets. But once we plotted global compute-hours instead of dollars, the picture became impossible to ignore.

Figure 1. AIAI-Q — Global AI Compute-Hours (2025 = 100). Inference overtakes training by 2027.

The AIAI-Q curve rises faster than financial metrics can capture because it measures what’s actually running, not what’s being budgeted. That makes it a leading indicator: utilization is surging faster than capital or power infrastructure can adapt.

A recent RAND Corporation analysis projected that in 2025, AI data center demand will likely reach about 21 GW of total power capacity, more than a fourfold increase from 2023 and twice the total power capacity of the state of Utah (RAND 2025, EIA 2024). When compared with our AIAI-Q index, the trajectories align closely, pointing to a total AI power draw near 65 GW by 2030.
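To put the two power figures on a common footing, the following sketch computes the compound annual growth rate implied by moving from about 21 GW in 2025 to about 65 GW in 2030. Treating both figures as points on a single smooth curve is an assumption made here for illustration; the function name `implied_annual_growth` is hypothetical.

```python
def implied_annual_growth(start_value, end_value, years):
    """Compound annual growth rate that takes start_value to end_value."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


if __name__ == "__main__":
    # ~21 GW in 2025 (RAND projection) to ~65 GW in 2030 (figure cited in the text).
    rate = implied_annual_growth(21.0, 65.0, 2030 - 2025)
    print(f"Implied growth: {rate:.1%} per year")
```

The result is roughly 25% per year, sustained for five years, which gives a sense of how early the infrastructure ramp would need to start.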

That number isn’t the headline — the timing is.

The RAND projection also implies the infrastructure ramp must begin years earlier than the official investment cycle anticipates. It takes time to build data centers. Yet most capital allocation models still treat inference as a future load rather than an imminent one that calls for immediate action.

This is where the opportunity lies. Utilization is leading valuation by about two years. That’s exactly what the AIAI shows: usage (AIAI-Q) inflects in 2026–2027, while capital (AIAI-C) won’t catch up until 2029. For data-center investors, this is the stealth entry point: the moment when inference demand is visible in operations but not yet visible on balance sheets. By the time CapEx trends confirm the shift, the edge will be gone.

We entered the 100–150 band of the index in late 2025, the zone where early infrastructure investors can earn the largest compounded returns. Waiting until 2027 means missing that sweet spot. The signal is unmistakable. The question now is how to build for this new era.

From Recognition to Action

So what exactly should investors bet on? Training rewards scale, which leads to vast, centralized campuses tuned for peak throughput and batch efficiency. Inference rewards responsiveness. It needs an architecture optimized for latency, concurrency, and proximity to data and users. The designs, cooling systems, interconnects, and even rack-level power densities will evolve accordingly. The winners in the next cycle will not be the builders of bigger clusters, but the architects of faster, smarter, more distributed compute fabrics. The inference era will demand new hardware, new memory systems, and new network topologies. That is how the next phase of data center value will be created. We’ll explore what an inference-centric data center will look like, and what technologies it will need, in a future article.

As in the 2009 shift from enterprise servers to cloud, early adopters stand to capture disproportionate returns.

Conclusion

Inference is already here. It is just not where analysts are looking. The layoffs across major tech firms aren’t signs of contraction. They’re evidence that AI has quietly taken over operational workloads. Every Copilot query, customer-service chatbot, internal coding agent, or decision-support prompt runs millions of inference cycles each day. These cycles don’t appear as new revenue lines or CapEx events. They appear as shrinking payrolls and faster task completion. In other words, the compute-hours are hidden inside software, not inside data-center announcements. Inference isn’t the next phase of AI. It is already the phase we are living in.

Author: Jay Chiang, Pascaline Systems Inc.

Series: AI Infrastructure Research №1 (2025)

Jay Chiang is a co-founder of Pascaline Systems Inc., developing cognitive-storage and AI-infrastructure technologies that connect compute to trusted knowledge. He writes about AI systems, data centers, and the economics of intelligence.

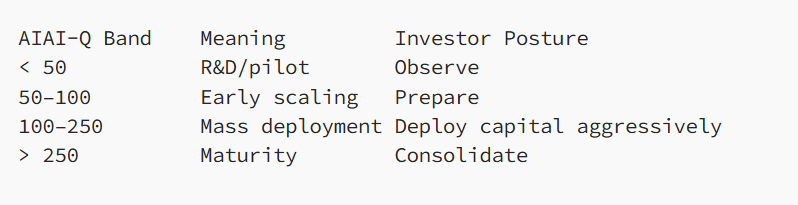

Bill Barry & Daniel Anger provide welcome remarks

Agentic Enterprise @ McKinsey — Event Recap

What do we do in Silicon Valley on a Friday night? We gather to explore the future of Agentic Enterprise. Yesterday, we filled McKinsey & Company’s beautiful Redwood City office with founders, operators, and innovators for an evening of sharp insights, lively conversation, and community building.

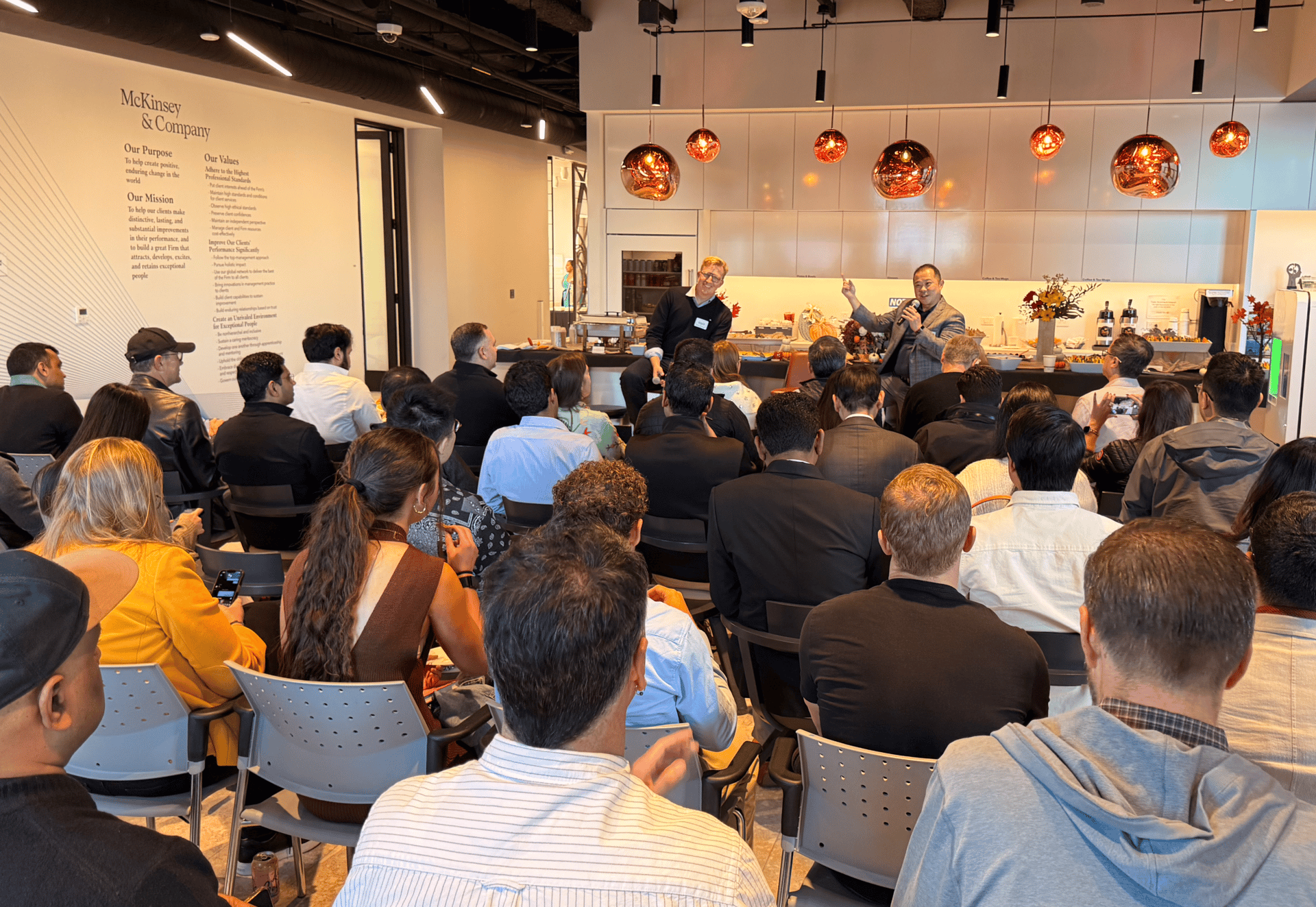

Nandita Bothra, Associate Partner at McKinsey, opened the evening by moderating a panel with James Urquhart, CTO of Kamiwaza.ai, and Okhtay Azarmanesh, Principal AI Architect at Snowflake. Their discussion dove into how autonomous AI is accelerating business velocity and reshaping what execution looks like in enterprise environments.

James Urquhart, Okhtay Azarmanesh and Nandita Bothra discuss Autonomous AI

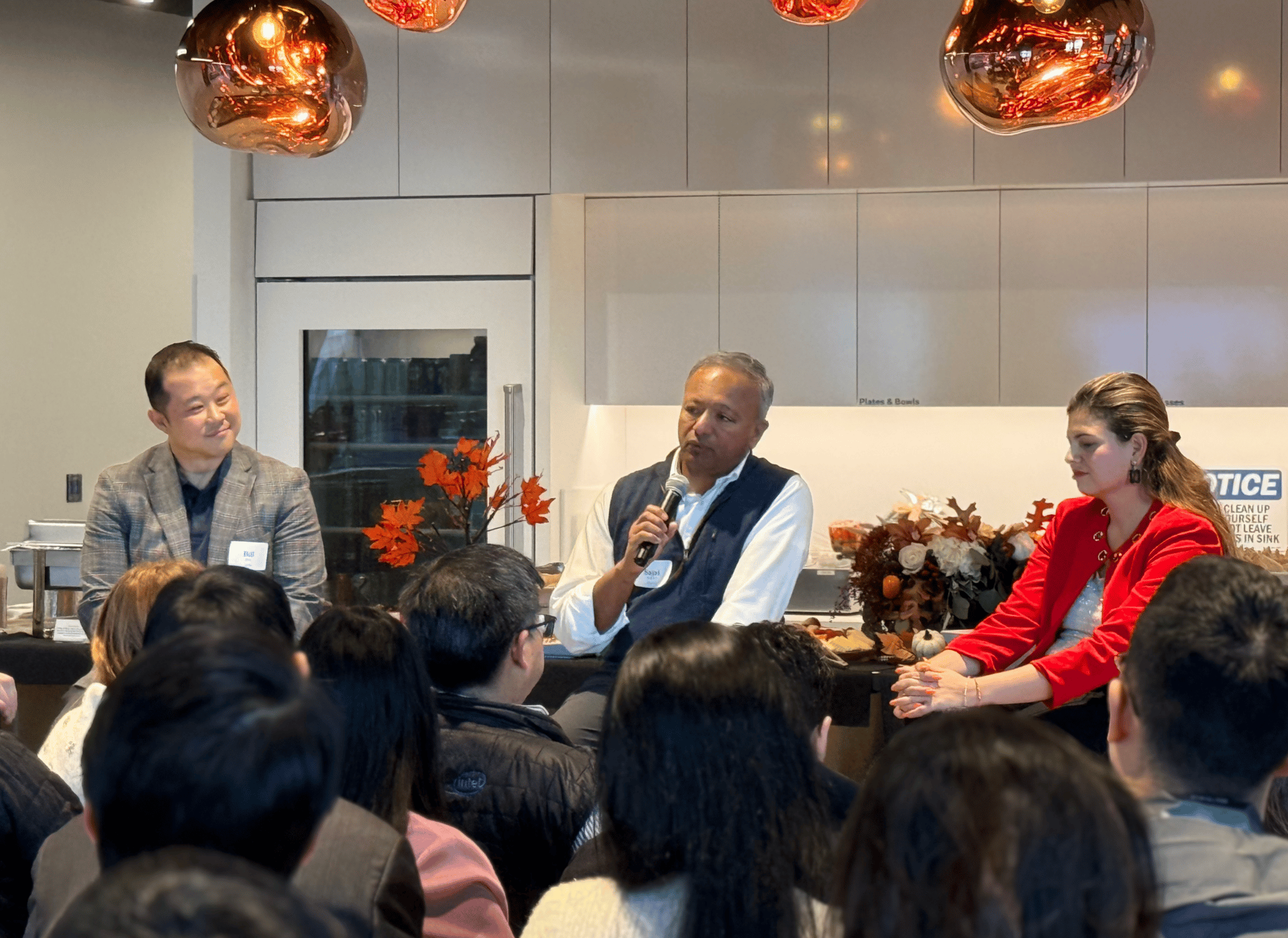

Bill Barry followed with a second panel focused on Redefining Performance with Autonomous Agents, joined by Sajai Krishnan, Head of Strategy at Clarifai, and Mariane Bekker, Head of Dev Relations at You.com. Together, they explored the emerging performance paradigms made possible by agentic systems—and what organizations must rethink to keep pace.

Bill Barry moderates a panel with Sajai Krishnan and Mariane Bekker

A huge thank you to our friends at McKinsey for hosting us with such warmth (and an excellent spread). It was a full house, a fantastic atmosphere, and a strong reminder of the momentum building around autonomous enterprise technologies.

Onwards and upwards! 🚀

Upcoming Events

We’re heading into the final stretch of 2025 with a powerful lineup of events centered on real-world enterprise AI adoption.

On December 11, Supermicro and AMD will host Enterprise AI Day at the stunning Hotel Valencia Santana Row, a half-day gathering featuring enterprise-leading panels and holiday-season networking.

Finally, on December 16, we’re teaming up with the AI Tech Leaders Club to close out the year with a hands-on workshop designed specifically for senior Technical Decision Makers at Snowflake’s office in Menlo Park.

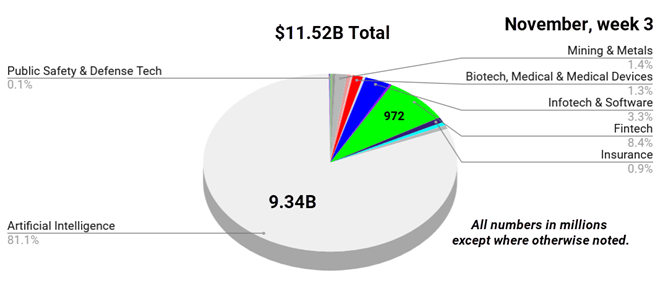

Bay Area Startups Collectively Secured $11.52B this week

Bay Area startups closed on $11.52B in fundings in week 3 of November, taking the month-to-date total to just over $17.61B. One $6B-plus megadeal and eight more megadeals contributed to that total, with Project Prometheus' $6.2B combined seed and Series A the largest this week – and so far this month.

2025 deal flow: According to Carta, more deals close in the third week of November than in any other week of the year. The number of fundings this week, 55 new rounds, supports that. The deal flow is reminiscent of the ZIRP (zero interest-rate policy) era of 2021-22 in deal numbers but not in deal amounts. The amounts invested YTD in 2025 are much larger, both in total and per deal, than in the equivalent period of 2021. Total SV fundings in 2021 were ~$121B; 2025 YTD exceeded that figure in mid-September.

AI concerns: A growing number of voices have expressed concern over AI valuations and perceived risks. This week, tech stocks reacted, sliding for most of the week. Nvidia was not immune: despite a stellar Q3 earnings report that exceeded expectations and a Q4 sales projection of $65B, an increase of $11B, its stock price took a hit. The market rallied on Friday but ended the week down.

The Venture Market Report for Q3 2025 is now posted for browsing online. Curious about fundings and venture funds raised? And money coming back into the valley through M&A and IPOs? The data for the Venture Market Report comes directly from the Pulse of the Valley weekday newsletter and summarizes fundings by sector and series, acquisitions and IPOs (with details), plus new funds raised by investors and new VC firms launched. Check it out and sign up for a one-week free trial of the Pulse while you're there.

For sales people and service providers looking for who's just closed new fundings: The Pulse of the Valley weekday newsletter keeps you current with capital moving through the startup ecosystem in SV and Norcal. Startups raising rounds and their investors; investors raising and closing funds; liquidity (M&A and IPOs) and the senior executives on both sides starting new positions. Details include investor and executive connections + contact information on tens of thousands of fundings. Check it out with a free trial, sign up here.

Follow us on LinkedIn to stay on top of what's happening in 2025 in startup fundings, M&A and IPOs, VC fundraising plus new executive hires & investor moves.

Early Stage:

Project Prometheus closed a combined $6.2B seed and Series A, developing AI for the physical economy.

Profluent Bio closed a $106M Series A, an AI-first company pushing the frontier of de novo protein design to author new biology.

Archetype AI closed a $35M Series A, the Physical AI company helping humanity make sense of the real world.

Wispr Flow closed a $25M Series A; its app (available on Mac, iPhone and Windows) lets you speak naturally and see your words perfectly formatted, with no extra edits and no typos.

Dryft AI closed a $5M Seed, building artificial intelligence that mimics, optimizes, and automates complex human decisions in manufacturing operations.

Growth Stage:

Lambda Labs closed a $1.5B Series E; its GPU cloud and on-prem hardware enable AI developers to easily, securely and affordably build, test and deploy AI products at scale.

Luma AI closed a $900M Series C, building a multimodal general intelligence that can generate, understand, and operate in the physical world.

Kraken closed an $800M round (series unknown), one of the world’s longest-standing and most secure crypto platforms.

Physical Intelligence closed a $600M Series B, bringing general-purpose AI into the physical world, developing foundation models and learning algorithms to power the robots of today and the physically-actuated devices of the future.

KoBold Metals closed a $163M Series C, discovering the materials critical for the renewable energy revolution using AI and HI.

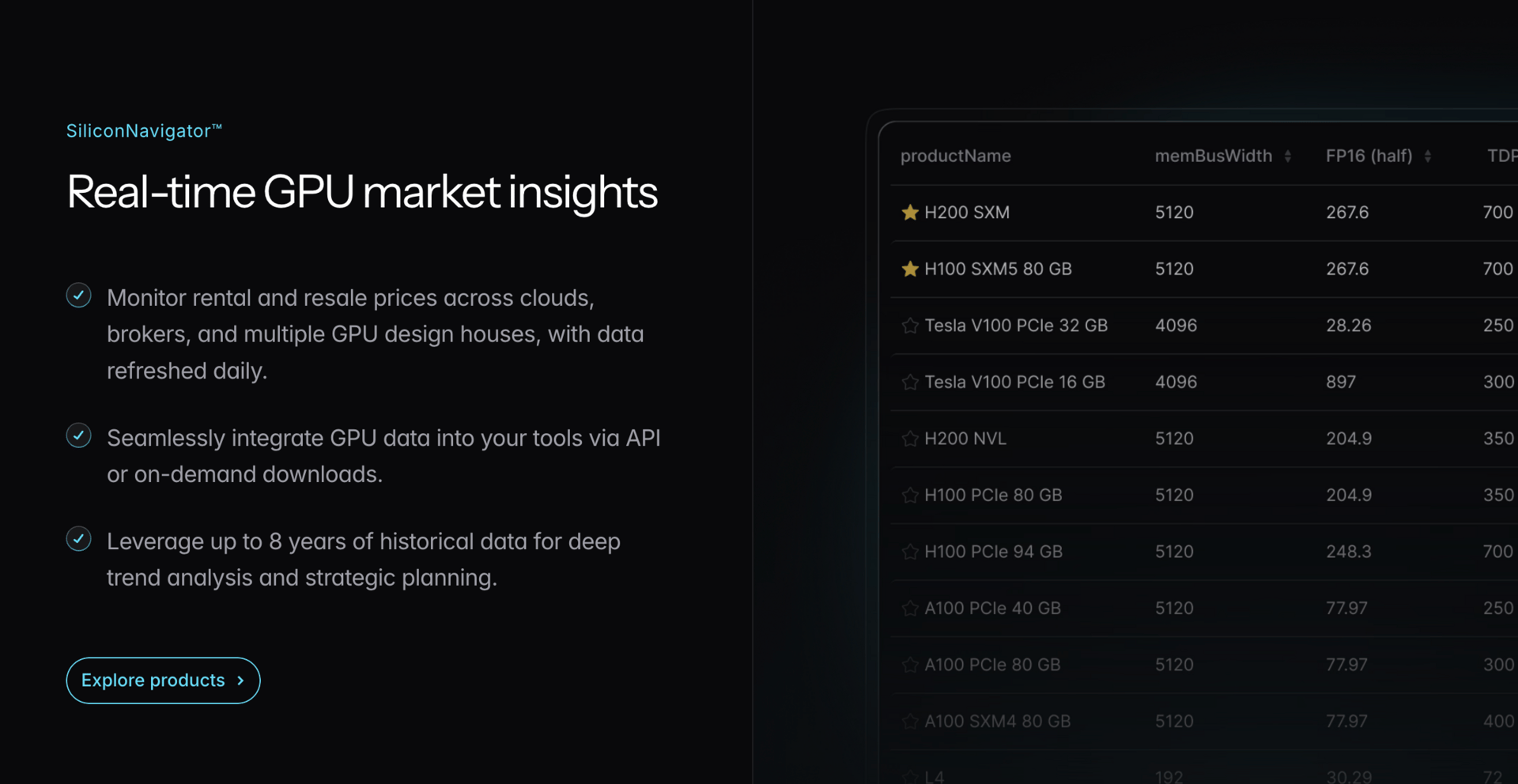

Silicon Data

Silicon Data provides market intelligence for the global compute economy. The company tracks real-time GPU rental and resale prices, offers independent performance benchmarks, and provides carbon impact data for AI infrastructure.

What Silicon Data Delivers

• Live GPU pricing across clouds and brokers

• Independent benchmark testing through SiliconMark

• Predictive pricing and market forecasting

• Carbon footprint analysis for compute workloads

Why It Matters

Compute is becoming one of the most valuable resources in AI. Transparent data helps buyers, builders, and investors make smarter infrastructure decisions, negotiate contracts with confidence, and monitor performance claims.

Who They Serve

Financial institutions, AI companies, cloud customers, and data center operators seeking clarity and cost control across their compute strategies.

Learn more at silicondata.com.

Your Feedback Matters!

Your feedback is crucial in helping us refine our content and maintain the newsletter's value for you and your fellow readers. We welcome your suggestions on how we can improve our offering. [email protected]

Logan Lemery

Head of Content // Team Ignite
