Valley Recap

Happy Thanksgiving🦃 How GPU Memory Now Decides AI Performance 🦾 Enterprise AI Day 👨🏽‍💼

How GPU Memory Now Decides AI Performance 🦾

At AI INFRA SUMMIT 2025 in San Francisco, a consistent theme emerged across operator sessions. The next performance unlock in AI is no longer coming from larger clusters or higher FLOPs. It is coming from memory. Real throughput depends on how well systems move, store, and reuse context. The teams scaling inference at meaningful volume kept pointing to the same constraint. The GPU only performs well when its memory pathways stay clear.

Training shaped the first phase of the industry, but inference is defining the second. This shift shows up in Pascaline’s research*, which tracks compute-hours rather than capital spending. Its AI Inference Activity Index finds that inference workloads are growing faster than the training footprint, a trend that matches what operators see daily. Inference runs continuously, without the fixed start-and-stop cycle of training. Long prompts, retrieval layers, expanded context windows, and agentic reasoning all increase how much state must sit in memory.

Memory pressure rises fastest during inference because inference touches every part of the stack. Each request triggers a sequence of transfers across HBM, DRAM, PCIe, and storage, so even small inefficiencies have a visible impact. Latency climbs when KV caches grow too large for the available HBM, and throughput drops when models stall waiting for context. Operators often find that GPUs with strong theoretical specs underperform once they become memory-bound.
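To make the HBM pressure concrete, here is a back-of-the-envelope sketch that sizes a KV cache with the usual per-token formula (2 × layers × KV heads × head dimension × bytes per element). The model shape and the 64 GiB of HBM left free after weights are hypothetical round numbers chosen for illustration, not figures from the summit sessions.

```python
from dataclasses import dataclass

GiB = 1024 ** 3

@dataclass(frozen=True)
class ModelShape:
    """Hypothetical decoder-only transformer; the numbers used below are illustrative."""
    n_layers: int
    n_kv_heads: int      # with grouped-query attention this is smaller than the query-head count
    head_dim: int
    bytes_per_elem: int  # 2 for FP16/BF16

def kv_bytes_per_token(m: ModelShape) -> int:
    # Factor of 2: each layer stores one key and one value vector per KV head.
    return 2 * m.n_layers * m.n_kv_heads * m.head_dim * m.bytes_per_elem

def max_resident_sequences(m: ModelShape, seq_len: int, free_hbm_bytes: int) -> int:
    """Number of full-length sequences whose KV caches fit in the HBM left after weights."""
    return free_hbm_bytes // (kv_bytes_per_token(m) * seq_len)

shape = ModelShape(n_layers=32, n_kv_heads=8, head_dim=128, bytes_per_elem=2)
print(kv_bytes_per_token(shape))                         # 131072 (128 KiB per token)
print(kv_bytes_per_token(shape) * 32_768 / GiB)          # 4.0 GiB for a 32K-token context
print(max_resident_sequences(shape, 32_768, 64 * GiB))   # 16
print(max_resident_sequences(shape, 131_072, 64 * GiB))  # 4
```

Under these assumptions, quadrupling the context from 32K to 128K tokens cuts the number of sessions that fit in HBM from 16 to 4, which is the point where requests start queuing or cache has to move elsewhere.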

The new generation of agentic systems widens this gap. Models that plan, branch, and retry produce far more tokens per query, and research notes that multi-agent reasoning can raise token usage by an order of magnitude. More tokens translate directly into more KV state and heavier memory movement. Shimon Ben-David of WEKA presented on this at AI INFRA SUMMIT.
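The link between agentic behavior and KV state is linear, which a toy token ledger can show. This is a hypothetical accounting model, not a measurement: it assumes every branch and retry re-reads the full prompt with no prefix sharing, and it reuses an assumed 128 KiB of KV state per token.

```python
from dataclasses import dataclass

# Assumption: ~128 KiB of KV state per token, as for a hypothetical
# 32-layer model with 8 KV heads of dimension 128 stored in FP16.
KV_BYTES_PER_TOKEN = 128 * 1024

@dataclass(frozen=True)
class QueryTrace:
    """Hypothetical token accounting for one user query."""
    prompt_tokens: int
    steps: int            # reasoning / tool-call steps per attempt
    tokens_per_step: int  # tokens generated or ingested per step
    branches: int = 1     # parallel plans explored
    retries: int = 0      # extra attempts per branch after a failure

    def tokens_processed(self) -> int:
        attempts = self.branches * (1 + self.retries)
        # Simplification: every attempt re-reads the full prompt and appends
        # its own steps; no KV prefix is shared between attempts.
        return attempts * (self.prompt_tokens + self.steps * self.tokens_per_step)

    def kv_bytes_written(self) -> int:
        return self.tokens_processed() * KV_BYTES_PER_TOKEN

single = QueryTrace(prompt_tokens=2_000, steps=1, tokens_per_step=500)
agentic = QueryTrace(prompt_tokens=2_000, steps=6, tokens_per_step=500, branches=3, retries=1)

print(single.tokens_processed())   # 2500
print(agentic.tokens_processed())  # 30000
print(agentic.kv_bytes_written() / single.kv_bytes_written())  # 12.0
```

With these made-up parameters, three branches, one retry, and six steps turn a 2,500-token answer into 30,000 tokens, and the KV state written grows by exactly the same 12× factor.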

This is where storage orchestration enters the picture. Instead of holding all session state inside HBM, operators are beginning to offload portions of the cache to fast storage layers. When storage behaves like an extension of memory, active HBM stays available for computation. This can raise utilization and cut energy waste without adding hardware. The strongest operators view storage and memory as a shared plane rather than separate systems.
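The offload idea can be sketched as a two-tier cache: a small "HBM" tier with least-recently-used eviction that spills evicted session state to a larger "storage" tier instead of discarding it. This is a conceptual sketch under stated assumptions; the LRU policy, the class name `TieredKVCache`, and the use of Python `bytes` in place of GPU tensors are all hypothetical choices, not how any particular vendor's system works.

```python
from __future__ import annotations
from collections import OrderedDict

class TieredKVCache:
    """Hypothetical two-tier cache for per-session KV blocks.

    The "hbm" tier holds a fixed number of blocks in LRU order; blocks evicted
    from it are offloaded to a larger "storage" tier rather than dropped, so a
    returning session can reload its state instead of recomputing it.
    """

    def __init__(self, hbm_blocks: int) -> None:
        self.hbm_blocks = hbm_blocks
        self.hbm: OrderedDict[str, bytes] = OrderedDict()  # least recently used first
        self.storage: dict[str, bytes] = {}
        self.stats = {"hbm_hit": 0, "storage_hit": 0, "miss": 0}

    def put(self, key: str, block: bytes) -> None:
        self.storage.pop(key, None)  # the HBM copy becomes the authoritative one
        self.hbm[key] = block
        self.hbm.move_to_end(key)    # mark as most recently used
        self._offload_overflow()

    def get(self, key: str) -> bytes | None:
        if key in self.hbm:
            self.stats["hbm_hit"] += 1
            self.hbm.move_to_end(key)
            return self.hbm[key]
        if key in self.storage:
            self.stats["storage_hit"] += 1
            block = self.storage[key]
            self.put(key, block)     # promote back into HBM
            return block
        self.stats["miss"] += 1
        return None                  # caller would have to re-run prefill

    def _offload_overflow(self) -> None:
        while len(self.hbm) > self.hbm_blocks:
            key, block = self.hbm.popitem(last=False)  # evict the LRU entry
            self.storage[key] = block                  # spill to storage, don't discard

cache = TieredKVCache(hbm_blocks=2)
for session in ("a", "b", "c"):
    cache.put(session, f"kv-{session}".encode())
print(list(cache.hbm), list(cache.storage))  # ['b', 'c'] ['a']
cache.get("a")  # served from storage and promoted; 'b' is offloaded
print(list(cache.hbm), list(cache.storage))  # ['c', 'a'] ['b']
```

The design choice the paragraph describes is in `_offload_overflow`: eviction moves state down a tier instead of deleting it, so a storage hit costs a transfer rather than a full recompute.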

New performance metrics reflect this transition. Teams now measure bandwidth per request, state residency, cache hit ratios, and the cost of memory hops. They treat context as a first-class resource, choosing data-center sites to reduce how far context must travel between regions. When workloads run near the physical edge, where jitter is unacceptable, they build deterministic data pathways.
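A minimal sketch of how the four metrics named above might be computed from per-request counters. The field names, the `summarize` helper, and the per-hop cost weights are hypothetical; real inference servers expose their own counters, and the weights here are illustrative rather than measured.

```python
from __future__ import annotations
from dataclasses import dataclass, field

# Hypothetical relative cost per GB moved for each hop; illustrative weights, not measurements.
HOP_COST_PER_GB = {"dram->hbm": 1.0, "storage->hbm": 4.0}

@dataclass
class RequestStats:
    """Per-request counters an inference server might export (field names are hypothetical)."""
    duration_s: float
    bytes_moved: int       # total bytes transferred for this request
    cache_lookups: int     # KV blocks requested
    cache_hits: int        # KV blocks found already cached
    hbm_resident_s: float  # seconds the request's KV state stayed in HBM
    hop_bytes: dict[str, int] = field(default_factory=dict)  # bytes per tier transition

def summarize(reqs: list[RequestStats]) -> dict[str, float]:
    if not reqs:
        raise ValueError("need at least one request")
    n = len(reqs)
    lookups = sum(r.cache_lookups for r in reqs)
    return {
        # Mean achieved bandwidth per request, in GB/s.
        "bandwidth_per_request_GBps": sum(r.bytes_moved / r.duration_s for r in reqs) / n / 1e9,
        # Fraction of KV lookups served without recomputation.
        "cache_hit_ratio": sum(r.cache_hits for r in reqs) / lookups if lookups else 0.0,
        # Mean fraction of each request's lifetime that its state spent in HBM.
        "state_residency": sum(r.hbm_resident_s / r.duration_s for r in reqs) / n,
        # Weighted cost of all bytes moved between memory tiers.
        "memory_hop_cost": sum(HOP_COST_PER_GB[hop] * b / 1e9
                               for r in reqs for hop, b in r.hop_bytes.items()),
    }

r1 = RequestStats(duration_s=2.0, bytes_moved=8_000_000_000, cache_lookups=100,
                  cache_hits=90, hbm_resident_s=2.0, hop_bytes={"dram->hbm": 2_000_000_000})
r2 = RequestStats(duration_s=4.0, bytes_moved=8_000_000_000, cache_lookups=100,
                  cache_hits=60, hbm_resident_s=1.0, hop_bytes={"storage->hbm": 3_000_000_000})
summary = summarize([r1, r2])
print(summary)
```

In this toy data the second request moves the same bytes as the first but spends most of its life outside HBM and pulls state from storage, so it drags down residency and dominates the hop cost even though its hit ratio is only moderately lower.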

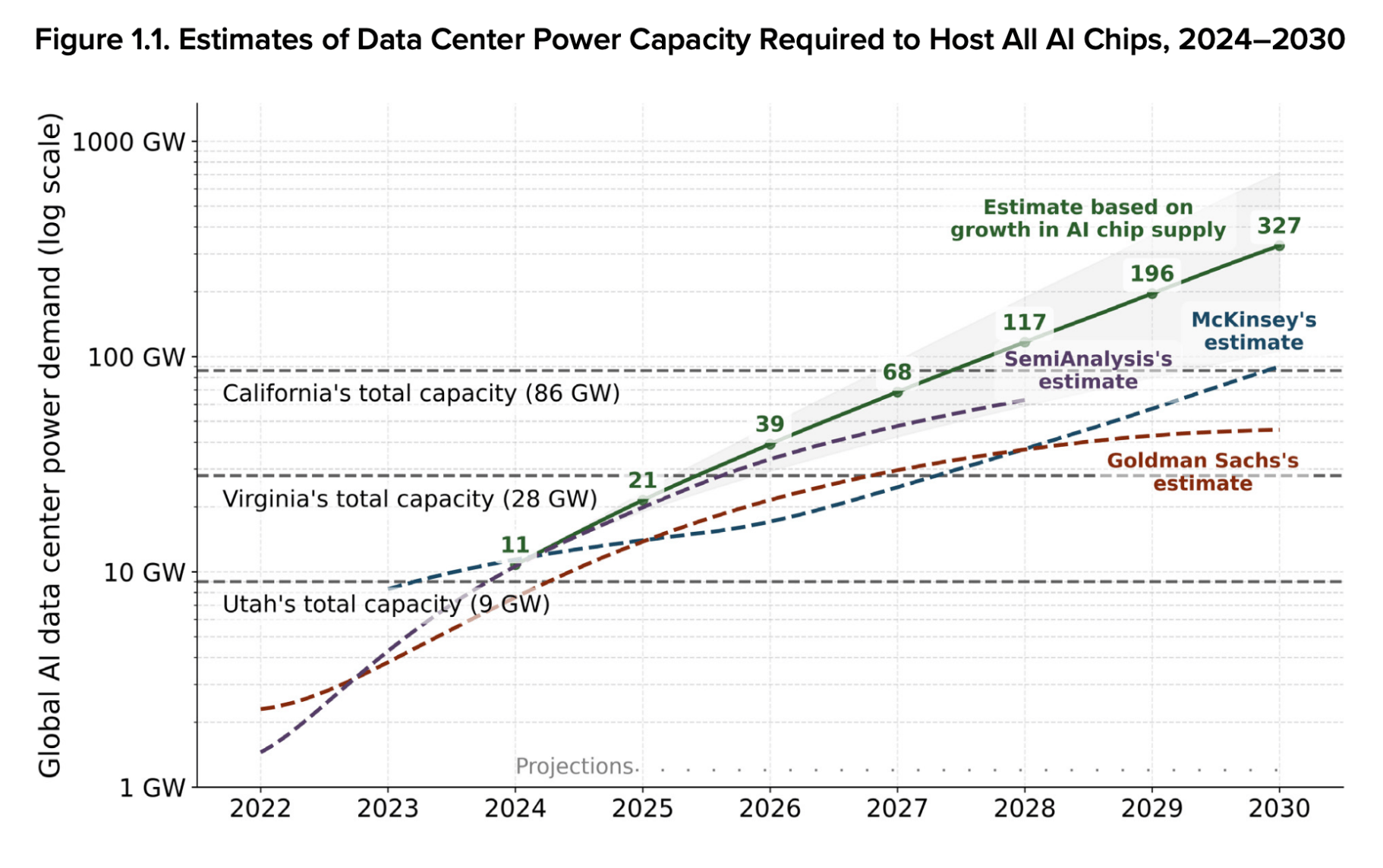

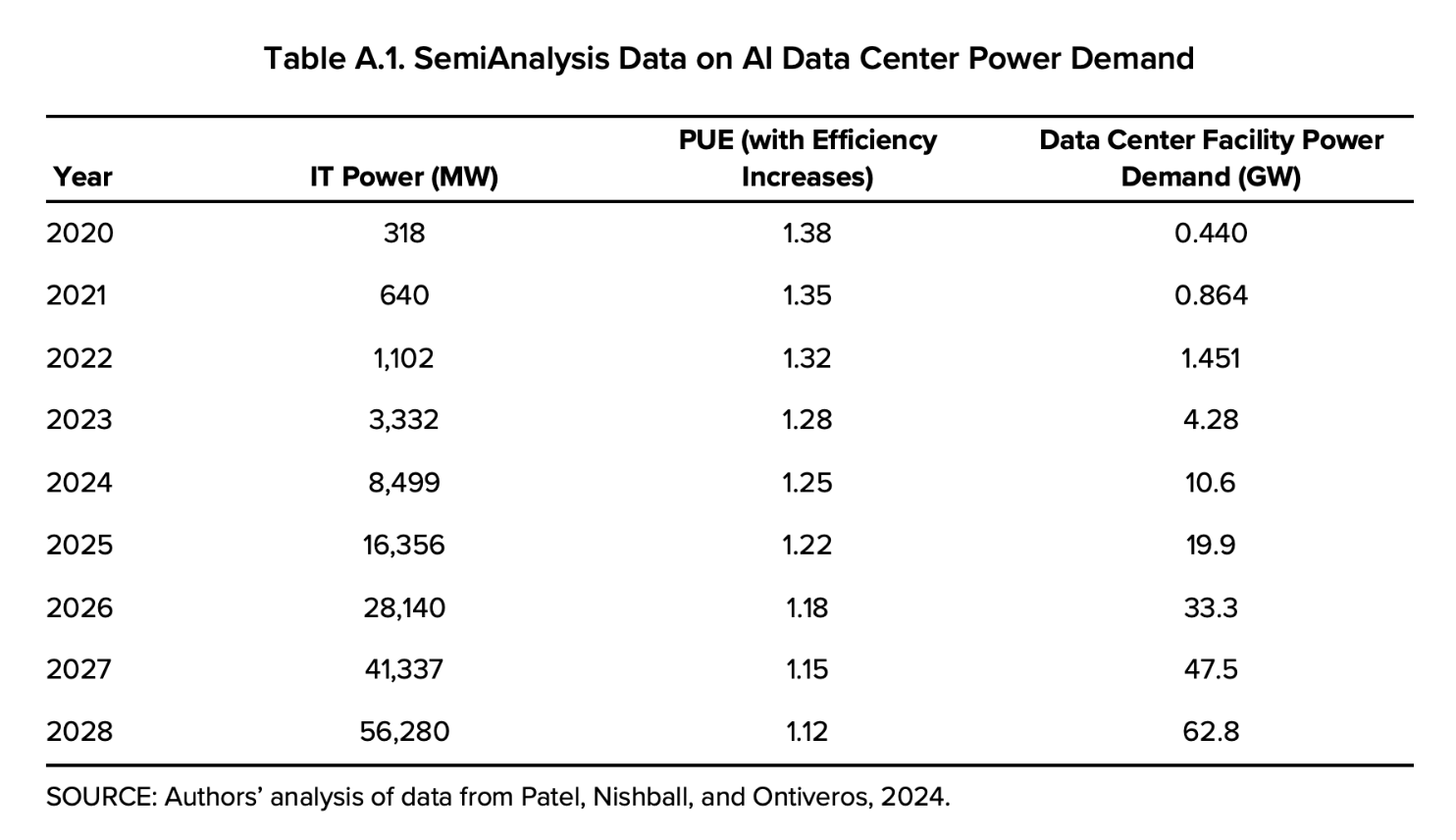

Data-center constraints reinforce the urgency. Power availability is tightening and build times remain long, while RAND projects AI power loads rising quickly through the end of the decade. Combined with the memory-heavy nature of inference, this signals a mismatch between usage growth and infrastructure timelines. Operators cannot rely on hardware expansion to absorb the pressure.

This is why memory has become a strategic priority. The industry is moving toward designs that emphasise data flow rather than peak FLOPs. Modern inference depends on how well systems manage KV state, context retrieval, and cache structure. The most competitive operators will be the ones who build around memory efficiency from the start.

The next wave of progress in AI infrastructure will come from better pathways, smarter caching, and more capable storage integration. These choices shape cost, energy use, and user experience more than raw scale. Once memory becomes the lens, the path forward is clear. The systems that move data with the most precision will deliver the highest performance.

Upcoming Events

On Dec. 11, Supermicro and AMD will host Enterprise AI Day at the stunning Hotel Valencia Santana Row, a half-day gathering featuring enterprise-leading panels and holiday-season networking.

Then, on December 16, we’re teaming up with the AI Tech Leaders Club to close out the year with a hands-on workshop at Snowflake’s office in Menlo Park, designed specifically for senior technical decision makers.

Aiify.io has increased our HealthTech Week discount to 30% in honor of Thanksgiving Week! Join us at HealthTech Week 2026 (coinciding with the J.P. Morgan Healthcare Conference), which features the 3-day HealthTech Summit (Jan 14-16). Thanksgiving discounts are available, and you can use our code “IGNITE30” for an additional 30% off.

This link automatically adds the code (discount is visible at checkout): https://luma.com/HTSummit=IGNITE30

Visit the event site for more information, including confirmed speakers and special events like their Startup Pitch Competition and the world's first HealthTech Wearables Fashion Show: http://HealthTechWeek.org

Bay Area Startups Collectively Secured $18B in November

Bay Area startups closed $319M in the short fourth week of November, ending the month with total funding of $18B, more than $4B above the previous November high of $13.9B in 2021. There were twenty-one megadeals in November, three of them billion-plus rounds: Project Prometheus' $6.2B, Anysphere's $2.3B, and Lambda Labs' $1.5B. In total, megadeals accounted for $15.1B, roughly 84% of the capital raised in November.

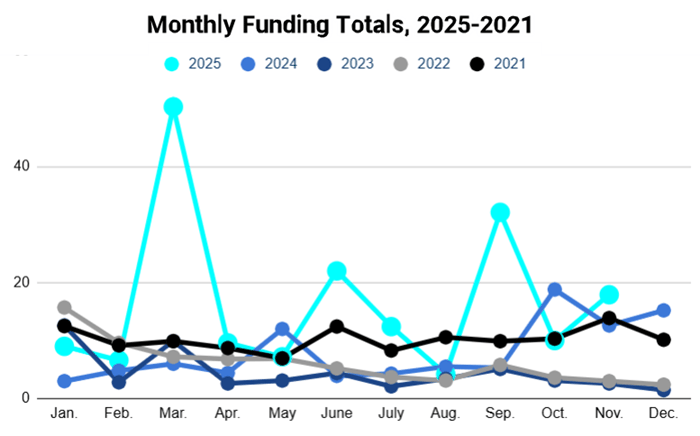

2025 funding has already exceeded the total raised in 2021, the previous high point. Artificial intelligence – both AI infrastructure and applied AI – has captured the majority of funding this year by a wide margin. Huge rounds, headed by the $40B raised by OpenAI early in the year, have spiked funding in a way we haven't seen since 2021. The chart above covers monthly funding totals for the last five years, and while there is still a month to go this year, 2025 is going to mark a new high.

For startups raising capital, and for salespeople and service providers tracking who has just closed new funding: The Pulse of the Valley weekday newsletter keeps you current with capital moving through the startup ecosystem in SV and NorCal. It covers startups raising rounds and their investors; investors raising and closing funds; liquidity events (M&A and IPOs); and the senior executives on both sides starting new positions. Details include investor and executive connections plus contact information on tens of thousands of fundings. Check it out with a free trial: sign up here.

Follow us on LinkedIn to stay on top of what's happening in 2025 in startup fundings, M&A and IPOs, VC fundraising plus new executive hires & investor moves.

Early Stage:

Momentic closed a $15M Series A for its AI-native testing platform, which makes sure your product works the way it should on every release.

HERMES Biosciences closed a $6M Seed, developing automated technology that isolates and analyzes intact extracellular vesicles (EVs) from biological samples.

Parallax Worlds closed a $4M Seed, providing advanced reliability testing software that stress-tests robots in hyper-realistic simulations to find and fix failure cases before they occur in the real world.

Freya closed a $3.5M seed round, building human-sounding Voice AI that handles inbound and outbound calls for enterprise call centers, from support and service to sales and beyond, fluently in dozens of languages, 24/7.

The Intelligent Search Company closed a $2.1M pre-seed, giving humans and AI agents the ability to find the right needles in any haystack by fusing contextual insights across live, multi-modal data streams and retrieving exactly what matters in real time.

Growth Stage:

Harmonic Math closed a $120M Series C, developing Mathematical Superintelligence (MSI), which the company describes as the next generation of artificial intelligence: rooted in mathematics, guaranteeing accuracy, and eliminating hallucinations.

Netomi closed a $43M Series C, delivering what it calls the only agent-centric CX platform: a full-stack, omnichannel solution that orchestrates runtime-reasoning agents that plan, act, and continuously optimize customer interactions across chat, voice, email, and messaging.

Numerai closed a $30M Series C, providing a new kind of hedge fund built by a network of data scientists.

Synexis

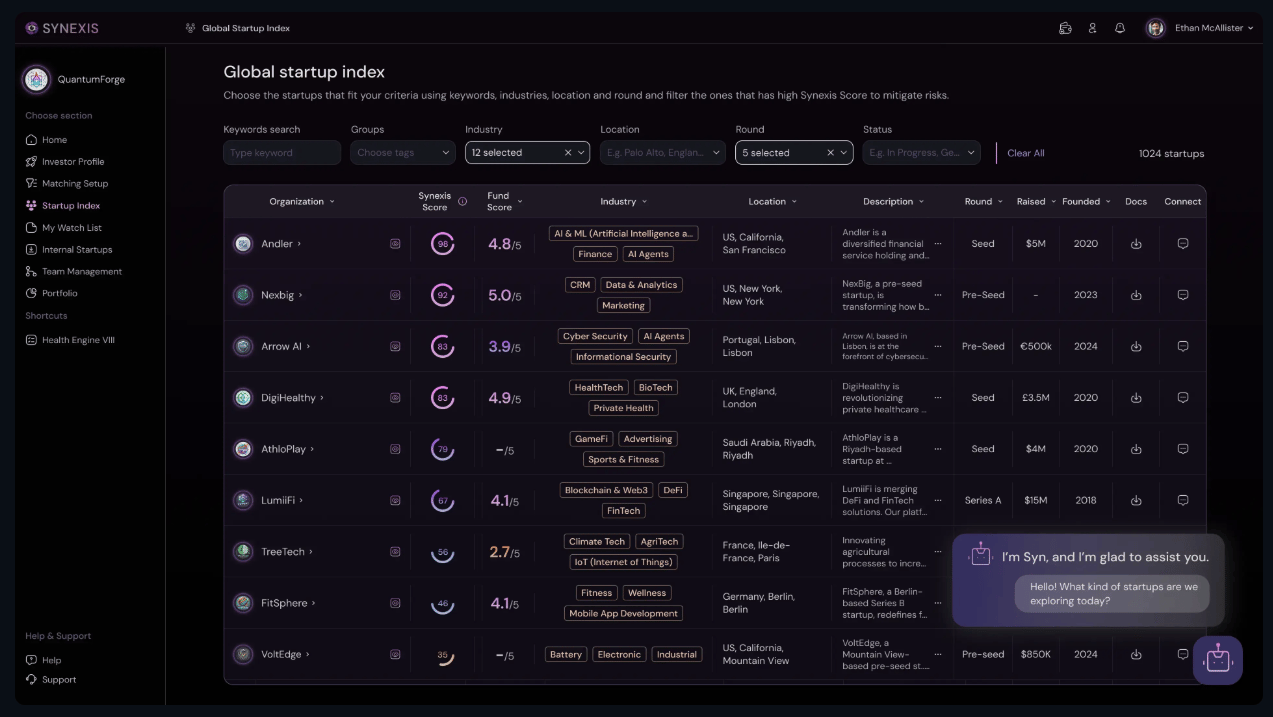

Synexis is an AI-native deal sourcing and intelligence platform built to modernize how investors, startups, and enterprises discover, evaluate, and manage opportunities.

What Synexis Delivers

• AI-driven deal matching and startup discovery

• Tools for diligence, documentation, and data organization

• Structured workflows for evaluating and tracking opportunities

• Transparency across startup profiles, assets, and milestones

Why It Matters

Deal sourcing is slow, fragmented, and built on manual processes. Synexis replaces scattered data with structured insight, helping teams move faster and with greater confidence.

Who It Serves

Investors, venture firms, startups preparing to raise capital, and enterprises exploring partnerships or acquisitions.

Learn more at synexis.io.

Your Feedback Matters!

Your feedback is crucial in helping us refine our content and maintain the newsletter's value for you and your fellow readers. We welcome your suggestions on how we can improve our offering. [email protected]

Logan Lemery

Head of Content // Team Ignite

Get a Senior Growth Team to Review Your Marketing Strategy.

Galactic Fed’s senior growth team has scaled brands like Varo, Edible, and Quiznos. And now they’re opening a limited number of free 1:1 sessions with these growth geniuses to help ambitious founders find their next breakthrough.

They’ll dig into your traffic, funnels, and ad performance to surface what’s working, what’s wasting money, and where your fastest growth levers really are.

Spots are limited, but one session could change your next quarter or your entire fiscal year.